free

Open-source Language Model Collaboration

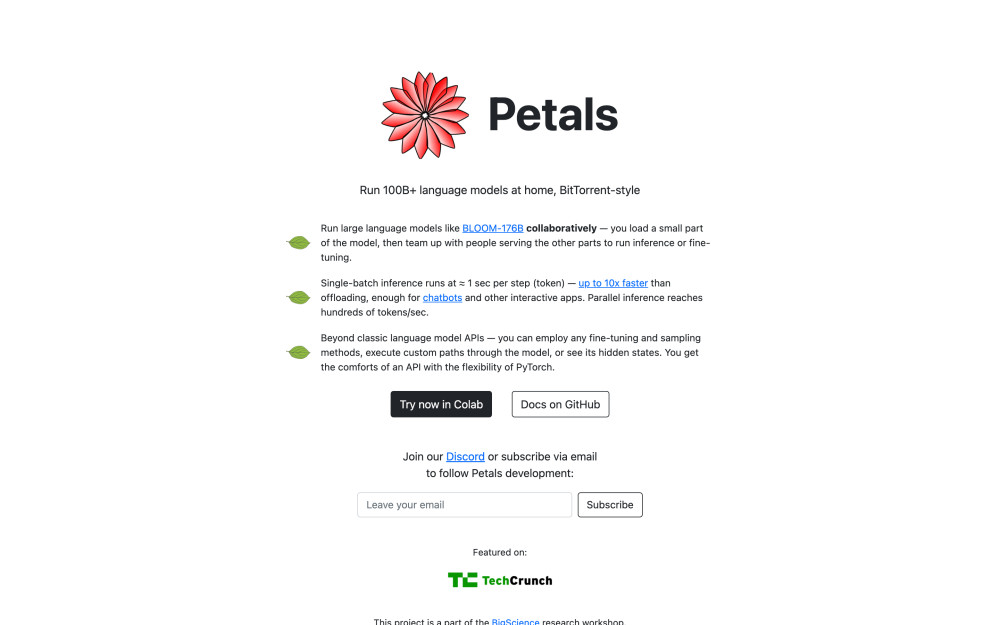

Petals is an open-source tool for running large language models, such as BLOOM-176B, collaboratively on a decentralized platform. Each user loads a small part of the model and teams up with others who serve the remaining parts to run inference or fine-tuning. Single-batch inference runs at approximately one second per step, up to 10x faster than offloading, and parallel inference reaches hundreds of tokens per second. Petals works with any fine-tuning and sampling method, and lets users execute custom paths through the model and view hidden states, combining the comforts of an API with the flexibility of PyTorch. Users can join the waitlist for the public swarm and follow Petals development through Discord or an email subscription. Petals is part of the BigScience research workshop.

Features:

- Open-source tool for running large language models collaboratively

- Decentralized platform for language models

- Fast single-batch inference at approximately one second per step, up to 10x faster than offloading

- Parallel inference reaching hundreds of tokens per second

- Works with any fine-tuning and sampling method, custom paths through the model, and access to hidden states

- Comforts of an API with the flexibility of PyTorch

- Waitlist available for public swarm

- Development updates available through Discord or email subscription

- Part of the BigScience research workshop
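
The core idea, where each participant loads a small part of the model and hidden states pass from one participant to the next, can be illustrated with a toy simulation. This is a minimal sketch in plain Python and not the Petals API: `Peer`, `make_layer`, `split_layers`, and `run_chain` are hypothetical names invented for this example, the "layers" are simple arithmetic functions standing in for transformer blocks, and no networking is involved.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


@dataclass
class Peer:
    """Hypothetical participant holding a contiguous slice of the model's layers."""
    name: str
    layers: List[Layer]

    def forward(self, hidden: Vector) -> Vector:
        # Apply only the layers this peer holds, in order.
        for layer in self.layers:
            hidden = layer(hidden)
        return hidden


def make_layer(scale: float, shift: float) -> Layer:
    """Stand-in for a transformer block: an element-wise affine map."""
    def layer(hidden: Vector) -> Vector:
        return [scale * x + shift for x in hidden]
    return layer


def split_layers(layers: List[Layer], num_peers: int) -> List[Peer]:
    """Assign contiguous, near-equal slices of the layer list to peers."""
    base, extra = divmod(len(layers), num_peers)
    peers, start = [], 0
    for i in range(num_peers):
        size = base + (1 if i < extra else 0)
        peers.append(Peer(f"peer-{i}", layers[start:start + size]))
        start += size
    return peers


def run_chain(peers: List[Peer], hidden: Vector) -> Tuple[Vector, List[Vector]]:
    """Pass hidden states through each peer in turn, recording them after every hop."""
    trace = [hidden]
    for peer in peers:
        hidden = peer.forward(hidden)
        trace.append(hidden)
    return hidden, trace


if __name__ == "__main__":
    model = [make_layer(float(i + 1), float(i)) for i in range(6)]
    swarm = split_layers(model, num_peers=3)
    output, hidden_states = run_chain(swarm, [1.0, -2.0])
    for peer, state in zip(swarm, hidden_states[1:]):
        print(peer.name, "->", state)
```

Because the peers hold consecutive slices of the same layer list, chaining them gives the same result as running every layer on one machine. The recorded `trace` loosely mirrors the ability to view intermediate hidden states.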